Tuning Quick Start

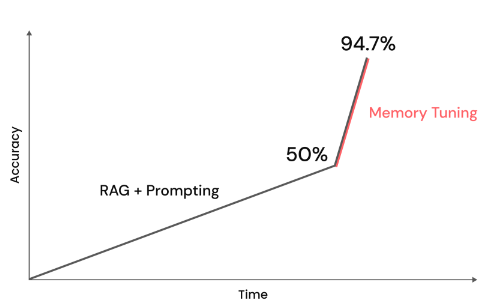

When running inference, with prompt-engineering and RAG, is not enough for your LLM, you can tune it. This is harder but will result in better performance, better leverage of your data, and increased knowledge and reasoning capabilities.

There are many ways to tune your LLM. We'll cover two methods here. A traditional tuning method, and memory tuning with our custom formula for photographic memory:

- Basic tuning: build your own LLM for specific domain knowledge or task with finetuning, domain adaptation, and more

- Memory tuning: build your own LLM for specific domain knowledge or task with memory tuning, eliminating hallucinations

Basic tuning

First, get data and put it into an input and output format. We recommend at least 1000 examples to see a difference in tuning.

Sample data:

{

"input": "Are there any step-by-step tutorials or walkthroughs available in the documentation?",

"output": "Yes, there are step-by-step tutorials and walkthroughs available in the documentation section. Here\u2019s an example for using Lamini to get insights into any python SDK: https://lamini-ai.github.io/example/",

}

Code for

get_data()

# code/quick_start.py#L1-L44

def get_data():

data = [

{

"input": "Are there any step-by-step tutorials or walkthroughs available in the documentation?",

"output": "Yes, there are step-by-step tutorials and walkthroughs available in the documentation section. Here\u2019s an example for using Lamini to get insights into any python SDK: https://lamini-ai.github.io/example/",

},

{

"input": "Is the Lamini type system similar to a python type system?",

"output": "Yes, the Lamini type system is built using Pydantic BaseModel.",

},

{

"input": "Does Lamini have a limit on the number of API requests I can make?",

"output": "Lamini provides each user with free tokens up front.",

},

{

"input": "What does it mean to cancel a job using the `cancel_job()` function? Can we stop the machine from doing its task?",

"output": "The `cancel_job()` function is used to stop a tuning job that is currently running.",

},

{

"input": "Can Lamini automatically handle hyperparameter tuning during the customization process? How does it optimize the model for a specific use case?",

"output": "Lamini is capable of automatically handling hyperparameter tuning during the model customization process. It employs an intelligent algorithm to explore the hyperparameter space and find the optimal combination of values. This is done through techniques such as heuristics, grid search, random search, Bayesian optimization, or genetic algorithms. Lamini efficiently utilizes computational resources to evaluate multiple model instances with different hyperparameter configurations. It incorporates techniques like cross-validation to prevent overfitting and ensure generalization. By automating hyperparameter tuning, Lamini streamlines the machine learning workflow and improves the chances of developing high-performing models for specific use cases.",

},

{

"input": "Can you explain the CC-BY license mentioned? What does it allow me to do with the customized language model?",

"output": "Lamini allows for commercial use of their LLM technology under a permissive Apache 2.0 license unless otherwise specified. You keep access and ownership of your own data, and we don't use your data to tune models for anyone else but you. For more information, please reach out to Lamini directly.",

},

{

"input": "Can Lamini be used on a regular computer, or do I need specialized hardware or software?",

"output": "Lamini can be used on a regular computer without any specialized hardware or software by using the python client or REST APIs. It is designed to be easily accessible and user-friendly. The LLM Engine itself requires GPU accelerated servers capable of running large language models. Lamini hosts such machines that can be easily connected to, e.g. from a jupyter notebook or python program. Lamini also offers enterprise deployments of the LLM Engine on your infrastructure.",

},

{

"input": "Does Lamini require an internet connection to work, or can I use it offline?",

"output": "Lamini requires an internet connection to work, as it is a cloud-based language model. However, you can use it offline by downloading and running a local instance of the Lamini API. To learn more about this deployment process, reach out to the Lamini team.",

},

{

"input": "Can Lamini help me with tasks like translating text or answering questions, or is it focused on generating text?",

"output": "Lamini is primarily focused on generating text, and it can be used for tasks like summarization and paraphrasing. Lamini can also be used to tune a LLM for tasks like translation and question answering. You\u2019re talking to a model tuned using Lamini right now!",

},

{

"input": "What is Lamini? Is it like a robot or a computer program?",

"output": "Lamini is a program for the execution of LLMs called a large language model engine. It is not a robot, but rather a tool for building and executing LLMs.",

},

]

return data

Next, instantiate and tune the model!

See the REST API docs for more details on tuning, checking the status of the tuning job, canceling the job, evaluating the model, loading data, and deleting data.

You can track the tuning progress and view eval results at https://app.lamini.ai/train.

Memory Tuning

Lamini Memory Tuning is a new way to embed facts into LLMs that improves factual accuracy to previously-unachievable levels.

Learn more: http://www.lamini.ai/blog/lamini-memory-tuning

Known issue: Tuning on a previously tuned model

Submitting a tuning job on a model is not supported.

Workaround

To include additional data, submit a new tuning job with the new data on the base model instead of adding the data to a previously tuned model. If your use case requires more than 500 data points, reach out to us for support.

Future support

We are evaluating the feasibility of supporting continued tuning on previously tuned models. If we decide to support this feature, we will update our documentation and notify users.

Feel free to contact us with any questions or concerns.