About

What is Lamini?

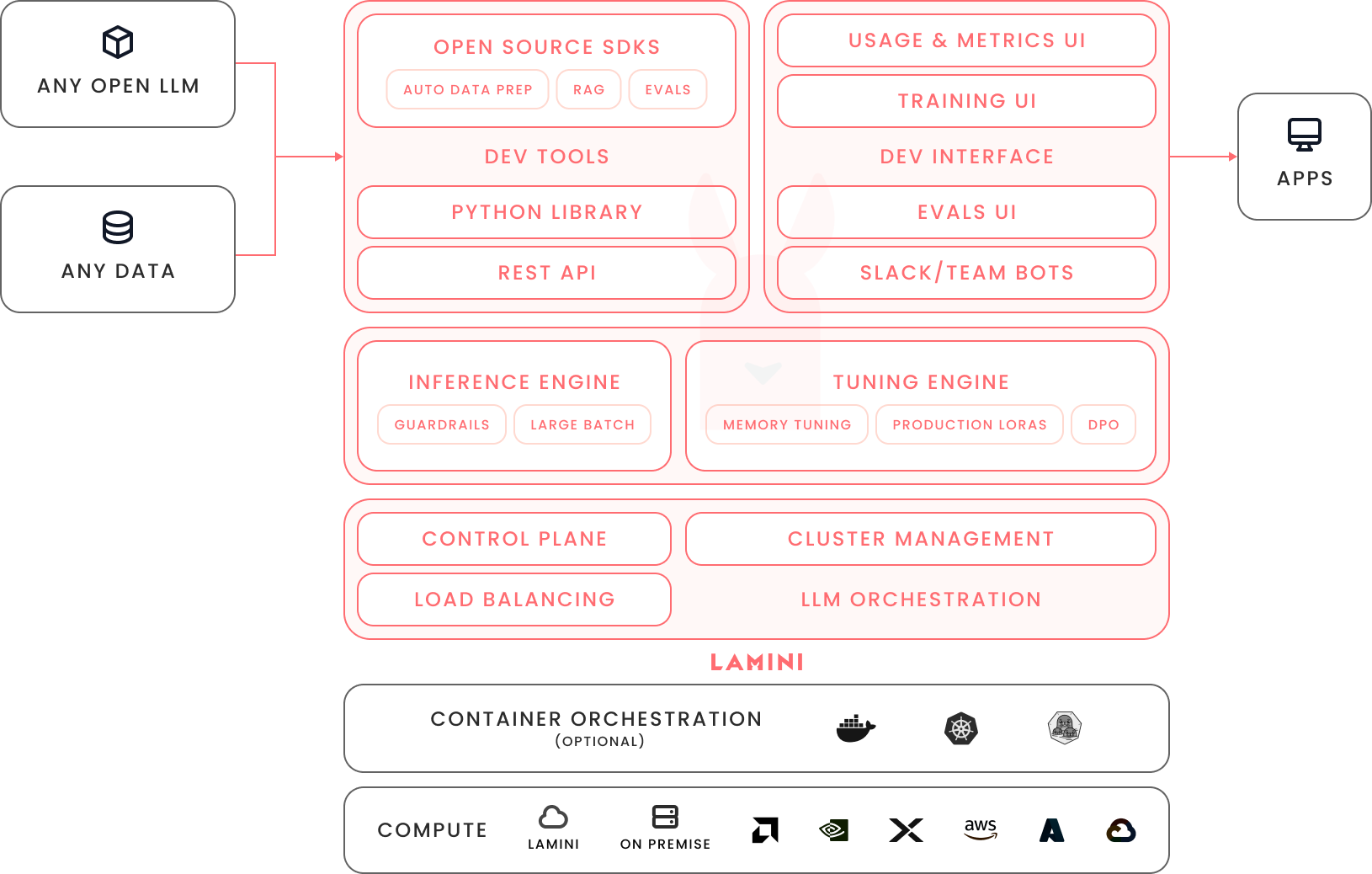

Lamini provides the best LLM inference and tuning for the enterprise. Factual LLMs. Up in 10min. Deployed anywhere.

The proprietary Lamini backend orchestrates GPUs to deliver exceptional LLM tuning and inference capabilities, which easily integrate into enterprise applications via the Lamini REST API, web UI, and Python client. The backend can run in your enviroment (on your own GPU infrastructure on premise or in your VPC), or you can use Lamini's supply of GPUs at (https://app.lamini.ai).

See for yourself: take a quick tour (with free API access!) to see how Lamini works, or contact us to run in your own environment.

What's unique about Lamini?

| Area | Problem | Lamini's solution |

|---|---|---|

| Tuning | Hallucinations | 95% accuracy on factual tasks: memory tuning |

| Tuning | High infrastructure costs | 32x model compression: efficient LoRAs |

| Inference | Rate limits | 52x faster than open source: iteration batching |

| Inference | Unreliable app integrations | 100% accurate JSON schema output: structured output |

Who are we?

Lamini's team has been finetuning LLMs over the past two decades: we invented core LLM research like LLM scaling laws, shipped LLMs in production to over 1 billion users, taught nearly a quarter million students about Finetuning LLMs, mentored the tech leads that went on to build the major foundation models: OpenAI’s GPT-3 and GPT-4, Anthropic’s Claude, Meta’s Llama 3, Google’s PaLM, and NVIDIA’s Megatron.

What's new?

Check out our blog for the latest updates.